
Robotics: How machines see the world

Autonomous bots like self-driving cars don’t see the world like us. Frank Swain discovers why this could be a problem.


Can you tell the difference between a human and a soda can? For most of us, distinguishing an average-sized adult from a five-inch-high aluminium can isn’t a difficult task. But to an autonomous robot, they can both look the same. Confused? So are the robots.

Last month, the UK government announced that self-driving cars would hit the roads by 2015, following in the footsteps of Nevada and California. Soon autonomous robots of all shapes and sizes – from cars to hospital helpers – will be a familiar sight in public. But in order for that to happen, the machines need to learn to navigate our environment, and that requires a lot more than a good pair of eyes.

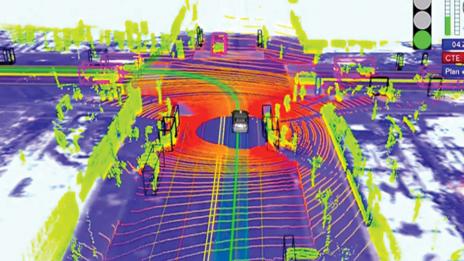

Robots like self-driving cars don’t only come equipped with video cameras for seeing what we can see. They can also have ultrasound – already widely used in parking sensors – as well as radar, sonar, laser, and infrared. These machines are constantly sending out flashes of invisible light and sound, and carefully studying the reflections to see their surroundings – such as pedestrians, cyclists and other motorists. You’d think that would be enough to get a comprehensive view, but there’s a big difference between seeing the world, and understanding it.
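To make "studying the reflections" concrete, here is a minimal sketch of the time-of-flight arithmetic that echo-based sensors rely on: a pulse travels out and back, so the distance is the wave speed times the round-trip time, halved. The function name and the example timings are illustrative assumptions, not values taken from any real vehicle.

```python
# Illustrative constants: speed of sound is approximate (dry air, about 20 °C).
SPEED_OF_SOUND_M_S = 343.0
SPEED_OF_LIGHT_M_S = 299_792_458.0


def echo_distance(round_trip_seconds: float, wave_speed_m_s: float) -> float:
    """Distance to a reflecting surface from a pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division.
    return wave_speed_m_s * round_trip_seconds / 2.0


# Hypothetical readings: an ultrasonic echo after 10 ms, a laser echo after 100 ns.
ultrasound_m = echo_distance(0.010, SPEED_OF_SOUND_M_S)
laser_m = echo_distance(100e-9, SPEED_OF_LIGHT_M_S)

print(f"ultrasound target at about {ultrasound_m:.3f} m")
print(f"laser target at about {laser_m:.2f} m")
```

The calculation gives a range and nothing more: it says something is there, not what it is.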

A scene generated by a robotic eye - but we find it much easier to interpret what's in it than they do

Which brings us back to the confusion between cans and pedestrians. When an autonomous car scans a person with its forward-facing radar, they show the same reflectivity as a soda can, explains Sven Beiker, executive director of the Center for Automotive Research at Stanford University. “That tells you that radar is not the best instrument to detect people. The laser or especially camera are more suited to do that.”

The trouble is that the more eyes you add, the more abstract data points the robot’s brain has to organise into a coherent picture of the world.
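One textbook way to organise readings from several sensors is to multiply each sensor's per-class estimate and renormalise, which assumes the sensors err independently. The sketch below is only an illustration of that idea; the `fuse` helper and every probability in it are invented for the example and do not describe how any real car works.

```python
def fuse(estimates):
    """Combine per-sensor class probabilities, assuming independent sensors."""
    labels = list(estimates[0].keys())
    combined = {label: 1.0 for label in labels}
    for estimate in estimates:
        for label in labels:
            combined[label] *= estimate[label]
    total = sum(combined.values())
    if total == 0:
        raise ValueError("sensors rule out every label")
    # Renormalise so the fused values sum to 1.
    return {label: value / total for label, value in combined.items()}


# Made-up numbers: radar cannot tell a person from a can, the camera can.
radar = {"person": 0.5, "can": 0.5}
camera = {"person": 0.9, "can": 0.1}

fused = fuse([radar, camera])
print(fused)
```

With these invented inputs the radar adds nothing to the decision, and the fused answer simply follows the camera. The bookkeeping grows with every extra sensor, and real sensors are rarely independent.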

Just an illusion

We take for granted what goes into creating our own view of the road. We tend to think of the world falling onto retinas like the picture through a camera lens, but sight is much more complicated. “The whole visual system shreds images, breaks them up into maps of colour, maps of motion, and so on, and somehow then manages to reintegrate that,” explains Peter McOwan, a professor of computer science at Queen Mary, University of London. How the brain performs this trick is still a mystery, but it’s one he’s trying to replicate in robot brains by studying what happens when we have glitches in our own vision.

There are some images that our brains consistently put together incorrectly, and these are what we call optical illusions. McOwan is interested in optical illusions because if his mathematical models of vision can predict new ones, it's a useful indicator that the model is reflecting human vision accurately. “Optical illusions are intrinsically fascinating magic tricks from nature but at the same time they are also a way to test how good your model is,” he says.

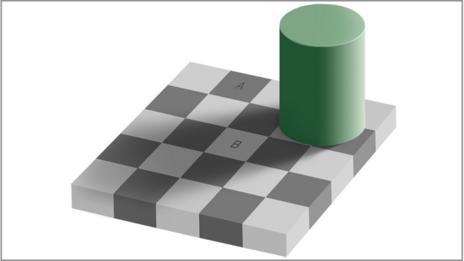

Most robots, for example, would not be fooled by the Adelson checkerboard illusion where we think two identical grey squares are different shades:

The squares marked A and B are the same shade of grey (Wikipedia)

“Humans looking at this illusion process the image and remove the effect of the shadow, which is why we end up seeing the squares as different shades of grey,” explains McOwan.
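A rough way to see why a machine is not fooled: if the brightness reaching a camera is modelled as surface reflectance multiplied by illumination, a dark tile in full light and a light tile in shadow can produce exactly the same pixel value. The sketch below uses that simplified model with invented numbers; it is not taken from McOwan's work.

```python
import math


def pixel_value(reflectance: float, illumination: float) -> float:
    """Simplified image model: measured brightness = reflectance * illumination."""
    return reflectance * illumination


# Invented values: square A is a dark tile in full light,
# square B is a light tile sitting in shadow.
square_a = pixel_value(reflectance=0.3, illumination=1.0)
square_b = pixel_value(reflectance=0.6, illumination=0.5)

# A camera comparing raw pixels reports the two squares as identical.
same_to_camera = math.isclose(square_a, square_b)

# A viewer who discounts the shadow divides out the estimated illumination
# and recovers the surfaces' different reflectances instead.
perceived_a = square_a / 1.0
perceived_b = square_b / 0.5

print(same_to_camera, perceived_a, perceived_b)
```

The second step only works if the illumination is known or well estimated, and estimating it from a single image is the hard part.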

Although it might seem like the machine wins this round, robots have problems recognising shadows and accounting for the way they change the landscape. “Computer vision suffers really badly when there are variations in lighting conditions, occlusions and shadows,” says McOwan. “Shadows are very often considered to be real objects.”

Hijack alert

This is why autonomous vehicles need more than a pair of suitably advanced cameras. Radar and laser scanners are necessary because machine intelligences need much more information to recognise an object than we do. It’s not just places and objects that robots need to recognise. To be faithful assistants and useful workers, they need to recognise people and our intentions. Military robots need to correctly distinguish enemy soldiers from frightened civilians, and care robots need to recognise not just people but their emotions – even if (perhaps especially if) we’re trying to disguise them. All of these are pattern-recognition problems.

The contextual awareness needed to safely navigate the world is not to be taken lightly. Beiker gives the example of a plastic ball rolling into the road. Most human drivers would expect that a child might follow it, and slow down accordingly. A robot can too, but distinguishing between a ball and a plastic bag is difficult, even with all of their sensors and algorithms. And that’s before we start thinking about people who might set out to intentionally distract or confuse a robot, tricking it into driving onto the pavement or falling down a staircase. Could a robot recognise a fake road diversion that might be a prelude to a theft or a hijacking?

Military bots are currently guided by humans, but autonomy may grow in the future (SPL)

McOwan isn’t overly worried by the prospect of criminals sabotaging autonomous machines. “It’s more important that a robot acts predictably to the environment and social norms rather than correctly,” he says. “It’s all about what you would do, and what you would expect a robot to do. At the end of the day if you step into a self-driving car, you are at the mercy of the systems surrounding you.”

No technology on Earth is 100% safe, says Beiker, but he questions the focus on making sure everything works safely, rather than on what makes it work. “I found it amazing how much time the automotive industry spends on things they don’t want to happen compared to the time they spend on things they do want to happen,” he says.

He admits that for the foreseeable future we have to have a human monitoring the system. “It’s not realistic to say any time soon computers will take over and make all decisions on behalf of the driver. We’re not there yet.”


Source: http://www.bbc.com/future/story/20140822-the-odd-way-robots-see-the-world

/ About us

The 2045 Initiative was founded by Russian entrepreneur Dmitry Itskov in February 2011 with the participation of leading Russian specialists in the fields of neural interfaces, robotics, artificial organs and systems.

The main goals of the 2045 Initiative: the creation and realization of a new strategy for the development of humanity which meets global civilization challenges; the creation of optimal conditions promoting the spiritual enlightenment of humanity; and the realization of a new futuristic reality based on five principles: high spirituality, high culture, high ethics, high science and high technologies.

The main science mega-project of the 2045 Initiative aims to create technologies enabling the transfer of an individual’s personality to a more advanced non-biological carrier, and extending life, including to the point of immortality. We devote particular attention to enabling the fullest possible dialogue between the world’s major spiritual traditions, science and society.

A large-scale transformation of humanity, comparable to some of the major spiritual and sci-tech revolutions in history, will require a new strategy. We believe this to be necessary to overcome existing crises, which threaten our planetary habitat and the continued existence of humanity as a species. With the 2045 Initiative, we hope to realize a new strategy for humanity's development, and in so doing, create a more productive, fulfilling, and satisfying future.

The "2045" team is working towards creating an international research center where leading scientists will be engaged in research and development in the fields of anthropomorphic robotics, living systems modeling and brain and consciousness modeling with the goal of transferring one’s individual consciousness to an artificial carrier and achieving cybernetic immortality.

An annual congress, "The Global Future 2045", is organized by the Initiative to provide a platform for discussing mankind's evolutionary strategy based on technologies of cybernetic immortality, as well as the possible impact of such technologies on the global society, politics and economies of the future.

Future prospects of the "2045" Initiative for society

2015-2020

The emergence and widespread use of affordable android "avatars" controlled by a "brain-computer" interface. Coupled with related technologies, "avatars" will give people a number of new capabilities: the ability to work in dangerous environments, perform rescue operations, travel in extreme situations, etc.

Avatar components will be used in medicine for the rehabilitation of fully or partially disabled patients, giving them prosthetic limbs or helping them recover lost senses.

2020-2025

Creation of an autonomous life-support system for the human brain linked to a robot "avatar" will save people whose bodies are completely worn out or irreversibly damaged. Any patient with an intact brain will be able to return to a fully functioning bodily life. Such technologies will greatly expand the possibilities of hybrid bio-electronic devices, creating a new IT revolution and making all kinds of superimpositions of electronic and biological systems possible.

2030-2035

Creation of a computer model of the brain and human consciousness with the subsequent development of means to transfer individual consciousness onto an artificial carrier. This development will profoundly change the world: it will not only give everyone the possibility of cybernetic immortality but will also create a friendly artificial intelligence, expand human capabilities and provide opportunities for ordinary people to restore or modify their own brain multiple times. The final result at this stage can be a real revolution in the understanding of human nature that will completely change the human and technical prospects for humanity.

2045

This is the time when substance-independent minds will receive new bodies with capacities far exceeding those of ordinary humans. A new era for humanity will arrive! Changes will occur in all spheres of human activity – energy generation, transportation, politics, medicine, psychology, sciences, and so on.

Today it is hard to imagine a future when bodies consisting of nanorobots will become affordable and capable of taking any form. It is also hard to imagine body holograms featuring controlled matter. One thing is clear, however: humanity, for the first time in its history, will make a fully managed evolutionary transition and eventually become a new species. Moreover, prerequisites for a large-scale expansion into outer space will be created as well.

Key elements of the project in the future

• International social movement

• social network immortal.me

• charitable foundation "Global Future 2045" (Foundation 2045)

• scientific research centre "Immortality"

• business incubator

• University of "Immortality"

• annual award for contribution to the realization of the project of "Immortality".
